Here we describe how we developed a VR showroom that lets you create a dance show in real time.

Content:

- VR show in real time

- We began from laser show Avatar

- We drew inspiration from Adidas work

- The visuals were created in TouchDesigner

- We used Kinect first but abandoned it afterwards

- We purchased VR for work in 3D space

- We used Python for the development of some effects

- We made the footage for the show look real

- We have plans for creating the costume and visual effects program

VR show in real time

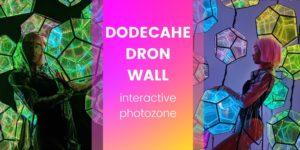

VR showroom is a software with ready-made effects for shows. The effects created in TouchDesigner are displayed on the walls of the room with the help of projectors and are managed by game controllers in real time. Pre-selected footage turns our development into a full-fledged show, the effects of which can be changed depending on the needs.

We began from laser show Avatar

In 2011, the founders of ETEREshop, Sergey Voitovich and Marina Goretskaya, created the Avatar laser show.

The show works as follows: first the dance is choreographed and filmed, and then artists draw the animation for it frame by frame. As a result, two things happen on stage at once: a rehearsed dance and a scripted animation projected onto the wall behind the dancer. This process was very complex and expensive to produce.

“Three or four years ago, I came up with the concept of a system that tracks the dancer’s movements in real time while the visuals are reproduced in the background in sync.

“We began to think about how this could be done. We came across the Perception Neuron suit, which consists of a system of neuron sensors. It allows you to load a human skeleton into the play space.

“In 2019, at an exhibition in Frankfurt, we met the developers of this suit and agreed to collaborate, but the project stalled: at first we did not have the time, and in 2020 the coronavirus pandemic swept the world,” says Sergey Voitovich, founder of ETEREshop.

In parallel, the development department tried to reproduce a project found on GitHub. It was an open-source development that uses ESP8266-12E microcontrollers and MPU6050 gyroscope/accelerometer modules to translate the movements of the human body into skeletal animation. The data comes from motion capture sensors attached to the person.

At the beginning of 2020, the development department of ETEREshop started the creation of a new product, which should eventually become an interactive VR showroom with a variety of lighting effects.

For our programmers and designers, this was a completely new task: none of them had ever created effects in TouchDesigner before. That did not deter them, and the developers plunged headlong into the work.

We drew inspiration from Adidas work

We looked at the work of brands like Adidas, with its Running Cube holographic projection, and were inspired by the 4D performances of Magic Innovations. We wanted to go further, though: not just a project with beautiful animation, but an interactive system that delivers a full-fledged show.

The visuals were created in TouchDesigner

In TouchDesigner, the team started with an interactive sandbox project, which was finished in May 2020. After that, we began to work closely on the effects for the new VR development.

The distinctive feature of TouchDesigner is that it is a visual real-time programming environment aimed at creating 2D and 3D visualizations.

The program does not have a low barrier to entry, but as you master it, it becomes a simple and understandable working environment. Out of the box, it supports the equipment and data transfer protocols commonly used in shows.

It works as follows: ready-made “containers” (each stores a network of nodes) are connected to the equipment (Kinect, virtual reality system, and projectors). They are then connected to the effect blocks and produce an interactive result in real time.

Effects may vary depending on how containers and blocks are connected to each other.

We used Kinect first but abandoned it afterwards

Two people on the ETEREshop team work with TouchDesigner: designer Katya and programmer Tolya. At first, they watched YouTube videos of effects created in this editor and reproduced them on their own computers.

In parallel, the developers began to use Kinect, a contactless motion-sensing game controller. It tracked a person's movements, which were then displayed on the wall with a projector. It works like this: the Kinect is placed in front of a person, recognizes the parts of the body, and translates them into 2D or 3D animation (depending on the purpose).

The Kinect idea worked, but the developers ran into several issues that needed to be solved. First, Kinect has a fairly small capture area. Second, it is not suited to three-meter rooms, for example a square room where the image must be projected onto all four walls.

“To get correct effects in a three-meter room with Kinect, you need several controllers, and the technology we used does not allow that. There is also the question of how to combine the picture from four devices into one,” says Tolya, the programmer.

We purchased VR for work in 3D space

“We needed a control system to create effects in 3D space. We looked through different options, but we did not find anything better than a virtual reality system. The HTC Vive Pro suited our purposes; the other approaches (for example, neural network methods using conventional cameras) had problems, mostly of a technical nature,” continues Tolya.
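To illustrate why one tracked 3D position is easier to spread across four walls than four separate camera images, here is a minimal sketch. It is not the team's TouchDesigner network: the room dimensions, the coordinate convention, and the function name `project_to_walls` are all assumptions made for the example.

```python
ROOM_SIDE = 3.0    # metres; assumed square floor plan
ROOM_HEIGHT = 3.0  # metres; assumed ceiling height


def _clamp01(value):
    """Keep a normalised coordinate inside the wall's 0..1 range."""
    return max(0.0, min(1.0, value))


def project_to_walls(x, y, z):
    """Project a tracked point orthogonally onto each of the four walls.

    Assumed convention: origin at the centre of the floor, x points east,
    y points up, z points north. Returns {wall: (u, v)}, where u runs
    along the wall and v runs from the floor (0) to the ceiling (1).
    """
    v = _clamp01(y / ROOM_HEIGHT)
    u_from_x = _clamp01(x / ROOM_SIDE + 0.5)  # shared by north and south walls
    u_from_z = _clamp01(z / ROOM_SIDE + 0.5)  # shared by east and west walls
    return {
        "north": (u_from_x, v),
        "south": (u_from_x, v),
        "east": (u_from_z, v),
        "west": (u_from_z, v),
    }
```

Because every wall reads from the same (x, y, z) point, no stitching of separate sensor images is needed; each projector only has to draw the effect at its own (u, v).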

We used Python for the development of some effects

The effects improved as the team's skills in TouchDesigner grew. The environment supports not only graphical programming but also text-based programming.

Python (for scripting) and GLSL, a C-like shading language, are integral parts of TouchDesigner. The team had to improve their knowledge in these areas to achieve better results in a limited time frame. For example, one of the third-party effects found online was fog, which did not work correctly with a person's fast movements (as in a dance).

Tolya figured it out: he completely replaced the block that was responsible for the interaction of the effect and corrected the rendering. In the initial version, the fog effect did not keep up with the movements of a person: sometimes it did not load, and sometimes it completely missed the command. The new block made the effect run smoother and faster.

Some of the effects were too hard to reproduce. There were cases when the developers found impressive solutions but could not recreate them because they were written in GLSL (OpenGL Shading Language).

With Python, everything is much simpler. For example, Tolya wrote a timer that automatically switches the effects after a set time. Python is also used for performance tweaks in some effects: if the computer lacks power, you can change a setting, and part of the effect is rebuilt automatically so that it still runs correctly.
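A timer and a performance setting of this kind can be sketched in plain Python. This is only an illustration under assumptions: the playlist, effect names, durations, parameter names, and the functions `effect_at` and `scaled_settings` are hypothetical, and the real version would run inside TouchDesigner using its own operators, which are not shown here.

```python
from bisect import bisect_right
from itertools import accumulate

# Hypothetical playlist: (effect name, duration in seconds).
PLAYLIST = [("fog", 30.0), ("particles", 45.0), ("trails", 25.0)]


def effect_at(elapsed, playlist=PLAYLIST):
    """Return the effect that should be active `elapsed` seconds into the show.

    The playlist loops once the last effect has finished.
    """
    ends = list(accumulate(duration for _, duration in playlist))
    position = elapsed % ends[-1]  # wrap around for looping playback
    return playlist[bisect_right(ends, position)][0]


def scaled_settings(base, quality):
    """Derive lighter effect parameters from one quality setting (0 < quality <= 1)."""
    if not 0.0 < quality <= 1.0:
        raise ValueError("quality must be in (0, 1]")
    # Every numeric parameter is scaled down, but never below 1.
    return {name: max(1, round(value * quality)) for name, value in base.items()}
```

Calling `effect_at` once per frame with the elapsed show time is enough to drive the switch, and `scaled_settings({"particles": 20000}, 0.5)` would halve the particle count on a weaker machine.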

It took developers from one to three days to create one effect, depending on the complexity of the work. After gaining experience, the time to create some was reduced to several hours.

We made the footage for the show look real

The marketing department worked on the effects alongside the developers. The task of the photographer Tonya and the videographer Sergey was to make sure the effects did not look chaotic but fit smoothly into the overall show.

“It turned out that the effects came out completely different and did not match each other in any way. We had to make sure that in the end it all came together as a full-fledged show,” says Tonya.

To do this, Tonya and Sergey thought through not only the color schemes of the effects but also the footage: videos that change in the background along with the selected effects. This solution allowed the dancer to switch smoothly from one effect to another and tied the disparate visuals into a single theme.

For the first test show, 10 effects were developed. It took four rehearsals to shoot it: the dancer had not only to learn how the controllers work but also to bring it all together in the dance.

“The most difficult thing was to combine the effects in one dance,” says Vika, the choreographer. The aim was to blend them harmoniously with the music and have them transition smoothly into each other.

Vika was the first to work with Kinect. At that stage, the effects were projected onto one wall and did not involve controllers. After the virtual reality system arrived, she joined the work on the first show. Together with Tonya, she thought through the movements and transitions and selected the music.

According to Vika, there were no problems with the controllers. She quickly learned how to manipulate the effects and was not afraid to experiment with movements. The rehearsals still took a long time, but the result was worth it. See for yourself:

We have plans for creating the costume and visual effects program

The ETEREshop team has not completely abandoned the development of the costume mentioned at the beginning of the article. Tolya is now working on gloves. If everything works out, they will replace the VR controllers and take load off the computer, since the VR system is quite demanding on hardware.

So far, the programmer has the gloves tracking tilt angles well. The team still needs to work out positioning by coordinates, i.e. tracking how a person moves through space.
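The article does not say which sensors the gloves use. Assuming they carry an accelerometer similar to the MPU6050 mentioned earlier, tilt can be estimated from the direction of gravity with the standard formulas below. The function name `tilt_angles` and the axis convention (z pointing up when the hand is flat) are assumptions for this sketch.

```python
import math


def tilt_angles(ax, ay, az):
    """Estimate roll and pitch in degrees from accelerometer readings at rest.

    ax, ay, az are accelerations along the sensor axes in any consistent
    unit; only the direction of gravity matters, not its magnitude.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)
```

Gravity alone gives tilt but neither heading nor position, which is consistent with positioning by coordinates being the part that still remains to be solved.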

“We want to make a costume and a program with a set of visual effects. It will be possible to switch between them on consoles and build a dance performance in real time,” says Sergey Voitovich, the founder of the company.

For the software to work correctly, you need powerful equipment: four laser projectors, a virtual reality system and a computer with a powerful video card.

So far, in test mode, we have worked out the 3D effects, made a show with a dancer, and seen how the effects work. The next step is to create 3D models and renderings to demonstrate the product.